Introduction

Training computer vision models requires precise learning rate adjustments to balance speed and accuracy. Cyclical Learning Rate (CLR) schedules offer a dynamic approach, alternating between minimum and maximum values to help models learn more effectively, avoid local minima, and generalize better. This method is particularly powerful for complex tasks like image classification and segmentation. In this post, we’ll explore how CLR works, popular patterns, and practical tips to improve model training.

Defining Learning Rate

The learning rate is a fundamental concept in machine learning and deep learning that controls how much a model’s weights are adjusted in response to the error it experiences during each step of training. Think of it as a step size that determines how quickly or slowly a model “learns” from data.

Key Points About Learning Rate

1. Role in Training:

During training, a model makes predictions and calculates the error between its predictions and the actual outcomes. The learning rate determines the size of the steps the model takes to adjust its weights to minimize this error. This adjustment is usually carried out by an optimization algorithm like gradient descent.

2. Setting the Right Pace:

Too High: A high learning rate might lead to very large steps, causing the model to “overshoot” the optimal values for weights. This can make training unstable and prevent the model from converging, leading to high error.

Too Low: A low learning rate means very small steps. The model will adjust weights slowly, which can make training take longer or even risk getting stuck in local minima (suboptimal points in the error landscape).

3. Finding a Balance:

The ideal learning rate allows the model to make meaningful adjustments without overshooting the optimal point. Setting this rate well can help the model converge faster while still reaching strong final performance.

4. Dynamic Learning Rate Adjustments:

To improve performance, various techniques dynamically adjust the learning rate during training. For example:

Learning Rate Decay: Gradually lowers the learning rate over time.

Cyclical Learning Rate (CLR): Alternates the learning rate within a specified range to allow more dynamic learning, which can be especially useful in complex tasks like computer vision.
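To make the "too high" and "too low" cases above concrete, here is a minimal sketch of plain gradient descent on a toy function, f(w) = w². The function, the starting point, and the three learning rates are illustrative assumptions, not values from any real model.

```python
def gradient_descent(lr, w0=5.0, steps=20):
    """Minimize the toy function f(w) = w**2 and return the final w."""
    w = w0
    for _ in range(steps):
        grad = 2.0 * w       # derivative of w**2
        w = w - lr * grad    # the learning rate scales every update
    return w

# Illustrative learning rates (assumptions chosen for this toy problem)
too_low = gradient_descent(lr=0.01)      # barely moves toward the minimum at 0
well_chosen = gradient_descent(lr=0.4)   # converges quickly
too_high = gradient_descent(lr=1.1)      # overshoots more on every step

print("too low:    ", too_low)
print("well chosen:", well_chosen)
print("too high:   ", too_high)
```

With the small rate the weight is still far from the minimum after 20 steps, while the large rate makes each step overshoot further than the last, so the weight moves away from the minimum instead of toward it.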

Image credit: https://cs231n.github.io/neural-networks-3/#annealing-the-learning-rate
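Learning rate decay can take several forms. The sketch below shows three common ones (step decay, exponential decay, and inverse-time decay). The function names and the default constants are assumptions chosen for illustration.

```python
import math

def step_decay(lr0, epoch, drop=0.5, epochs_per_drop=10):
    """Multiply the LR by `drop` once every `epochs_per_drop` epochs."""
    return lr0 * drop ** (epoch // epochs_per_drop)

def exponential_decay(lr0, epoch, k=0.1):
    """Smooth decay: lr = lr0 * exp(-k * epoch)."""
    return lr0 * math.exp(-k * epoch)

def inverse_time_decay(lr0, epoch, k=0.1):
    """Slower decay: lr = lr0 / (1 + k * epoch)."""
    return lr0 / (1.0 + k * epoch)

for epoch in (0, 10, 20, 30):
    print(epoch,
          step_decay(0.1, epoch),
          exponential_decay(0.1, epoch),
          inverse_time_decay(0.1, epoch))
```

All three only ever lower the learning rate, which is the behavior cyclical schedules depart from.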

Why Learning Rate Matters

The learning rate directly impacts a model’s ability to learn efficiently and accurately. It’s one of the most important hyperparameters in training neural networks, impacting:

Training Speed: A good learning rate speeds up training by taking appropriately sized steps.

Model Performance: A well-tuned learning rate helps reach better accuracy by avoiding unstable training or premature convergence.

Optimization Efficiency: Dynamic approaches like CLR can provide a balanced pace for exploring and fine-tuning, potentially achieving better results, especially in tasks with complex data.

When training deep learning models, especially in computer vision, selecting the right learning rate can make or break a model’s performance. Traditionally, learning rates start high and gradually decrease throughout training.

However, a more dynamic approach known as Cyclical Learning Rate (CLR) schedules has proven effective for faster convergence, better accuracy, and improved generalization. Here’s a closer look at CLR schedules, their benefits, and why they’re transforming computer vision model training.

Understanding the Basics: What Is a Cyclical Learning Rate Schedule?

A learning rate (LR) controls how much a model adjusts its weights during each training step. In a cyclical learning rate schedule, the LR doesn’t just decrease monotonically but instead oscillates between a minimum and maximum boundary throughout training. This oscillation helps the model explore and learn dynamically across training phases.

Some popular CLR patterns include:

Triangular: The LR linearly increases to a peak and then decreases, repeating this cycle. It’s simple and effective for early-stage learning.

Triangular2: Similar to the triangular pattern, but halves the amplitude (the gap between the base LR and the peak LR) at the start of each new cycle, allowing finer tuning over time.

Exponential (exp_range): The LR oscillates within a range that shrinks exponentially as training progresses, which can stabilize learning in later stages of training.
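The three patterns above can be written as one small function. This is a minimal pure-Python sketch following the formulation commonly used for CLR (a triangular wave scaled per cycle or per iteration). The function name `cyclical_lr` and the default `gamma` value are assumptions for illustration; the other defaults mirror the values used in the Keras example in this post.

```python
import math

def cyclical_lr(iteration, base_lr=0.001, max_lr=0.006, step_size=2000,
                mode="triangular", gamma=0.9999):
    """Return the learning rate at a given training iteration.

    step_size is the number of iterations in HALF a cycle.
    """
    cycle = math.floor(1 + iteration / (2 * step_size))   # 1-based cycle index
    x = abs(iteration / step_size - 2 * cycle + 1)        # 1 -> 0 -> 1 within a cycle
    if mode == "triangular":
        scale = 1.0                          # same amplitude every cycle
    elif mode == "triangular2":
        scale = 1.0 / (2 ** (cycle - 1))     # amplitude halves each cycle
    elif mode == "exp_range":
        scale = gamma ** iteration           # amplitude shrinks every iteration
    else:
        raise ValueError(f"unknown mode: {mode}")
    return base_lr + (max_lr - base_lr) * max(0.0, 1.0 - x) * scale

# Peak of the first and second cycle under each policy
for mode in ("triangular", "triangular2", "exp_range"):
    print(mode, cyclical_lr(2000, mode=mode), cyclical_lr(6000, mode=mode))
```

In every mode the LR starts at `base_lr`, climbs for `step_size` iterations, and returns to `base_lr` after `2 * step_size` iterations. Only the height of each peak differs.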

The Need for Cyclical Learning Rates in Computer Vision

Computer vision tasks, like image classification, object detection, and segmentation, require deep neural networks trained on large datasets. However, such tasks present challenges:

Complex Loss Landscapes: Deep neural networks often have highly complex loss landscapes, with many local minima and saddle points.

Risk of Overfitting: Prolonged training on large data can lead to overfitting, where the model becomes too specific to the training data and loses generalization ability.

Tuning Challenges: Selecting a single, ideal LR that balances speed and accuracy for the entire training process is difficult.

Using cyclical learning rates helps address these challenges by enabling:

Exploration and Avoidance of Local Minima: Oscillating LRs encourage the model to escape local minima or saddle points, where learning might otherwise stagnate.

Faster and Stable Convergence: Regular boosts to the LR allow the model to explore the loss surface more aggressively, leading to faster learning, while the periodic return to a low LR helps keep training stable.

Robustness and Improved Generalization: By varying the LR over cycles, CLRs can help reduce overfitting and improve the model’s performance on unseen data.

Implementing CLR in Computer Vision Models

Most popular deep learning frameworks, such as PyTorch, Keras, and TensorFlow, support cyclical learning rates, either natively or through add-on packages. Let’s go over implementations for these frameworks:

Keras Implementation:

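# CyclicLR is not shipped with tf.keras. This assumes a third-party callback,
# such as clr_callback.py from the bckenstler/CLR repository, is importable.
from clr_callback import CyclicLR

# step_size is the number of iterations in half a cycle
clr = CyclicLR(base_lr=0.001, max_lr=0.006, step_size=2000, mode="triangular")

model.fit(X_train, y_train, epochs=30, callbacks=[clr])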

PyTorch Implementation:

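from torch.optim import Adam
from torch.optim.lr_scheduler import CyclicLR

optimizer = Adam(model.parameters(), lr=0.001)
# cycle_momentum=False because Adam has no "momentum" parameter to cycle
scheduler = CyclicLR(optimizer, base_lr=0.001, max_lr=0.01, step_size_up=2000,
                     mode="triangular", cycle_momentum=False)

for epoch in range(epochs):
    for batch in train_loader:          # train_loader: your DataLoader (placeholder name)
        train(model, optimizer, batch)  # one training step (placeholder function)
        scheduler.step()                # update the learning rate after every batch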

How to Choose CLR Parameters

Selecting appropriate CLR parameters is crucial to get the most benefit:

Base and Max Learning Rates: Start with a lower base rate (e.g., 1e-5) and choose an upper rate that allows substantial learning (e.g., 1e-2 or 1e-3, depending on the model and dataset). A simple trick to estimate the max LR is to run a quick test using an exponentially increasing LR until the loss stops decreasing.

Cycle Length (Step Size): For computer vision tasks, a typical cycle length can range from 500 to 10,000 iterations, depending on the dataset size and model. Shorter cycles can be beneficial for larger datasets, while longer cycles work well for smaller datasets. Note that the step_size and step_size_up arguments in the code above count the iterations in half a cycle, not a full one.
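The quick test described above can be sketched in a few lines. This is a toy illustration, not a production tool: it runs on a one-dimensional quadratic loss instead of a real network, and the names `lr_range_test`, `toy_loss`, and `toy_grad`, along with the LR bounds, are assumptions made for the example.

```python
def lr_range_test(loss_fn, grad_fn, w0, lr_min=1e-4, lr_max=10.0, num_steps=100):
    """Raise the LR exponentially each step and return the last LR
    that still reduced the loss (None if even the first step did not)."""
    w = w0
    prev_loss = loss_fn(w)
    prev_lr = None
    for i in range(num_steps):
        # Exponential sweep from lr_min to lr_max
        lr = lr_min * (lr_max / lr_min) ** (i / (num_steps - 1))
        w = w - lr * grad_fn(w)
        loss = loss_fn(w)
        if loss >= prev_loss:        # the loss stopped decreasing
            return prev_lr
        prev_loss, prev_lr = loss, lr
    return prev_lr

# Toy problem (an assumption for illustration): f(w) = w**2
def toy_loss(w):
    return w ** 2

def toy_grad(w):
    return 2.0 * w

suggested = lr_range_test(toy_loss, toy_grad, w0=5.0)
print("last LR that still reduced the loss:", suggested)
```

On a real model the loss is noisy, so in practice it is usually smoothed before being compared, and the max LR is picked somewhat below the point where the loss turns upward.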

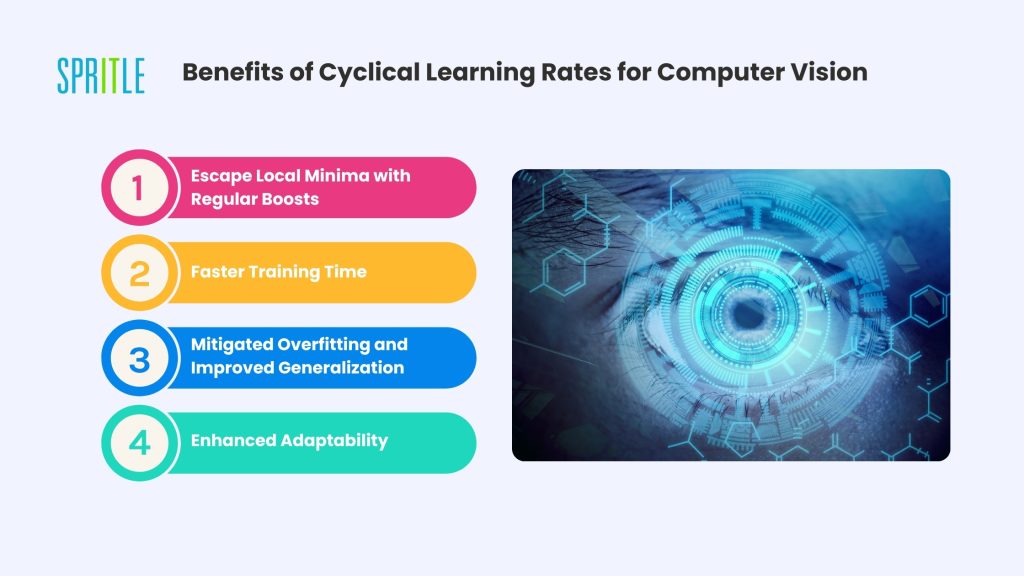

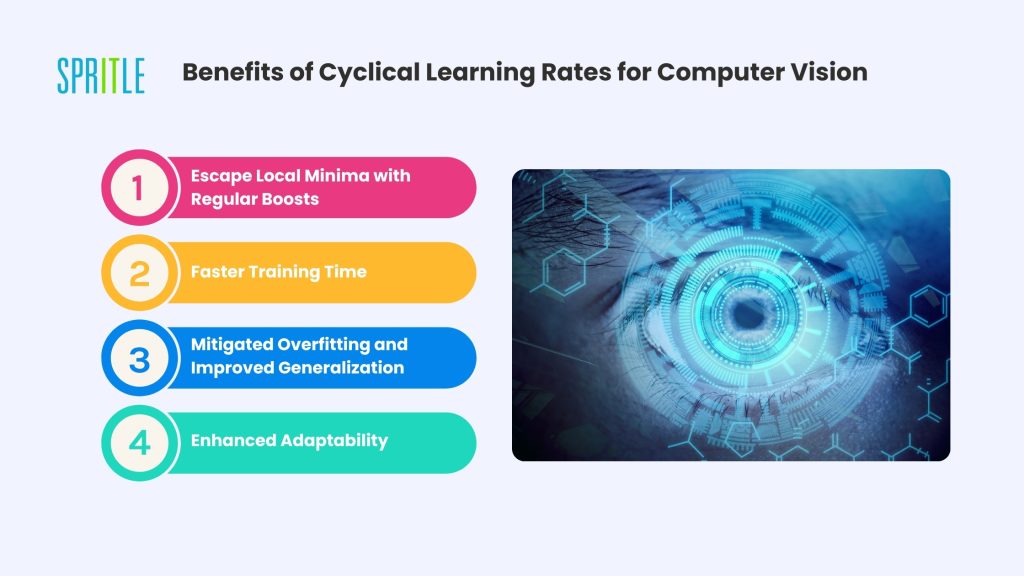
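One widely cited rule of thumb, from the original CLR paper by Leslie Smith, ties the step size to the dataset rather than fixing it by hand: set it to roughly 2 to 10 times the number of iterations in an epoch. The helper below is a minimal sketch of that heuristic. The function name and the default multiplier are assumptions, and the heuristic is a starting point, not a guarantee.

```python
import math

def clr_step_size(num_samples, batch_size, epochs_per_half_cycle=4):
    """Step size (iterations in half a cycle) as a multiple of one epoch."""
    iterations_per_epoch = math.ceil(num_samples / batch_size)
    return epochs_per_half_cycle * iterations_per_epoch

# Example: 50,000 training images with a batch size of 100
print(clr_step_size(50_000, 100))
```

With 50,000 training images (the size of the CIFAR training sets) and a batch size of 100, a multiplier of 4 gives the step size of 2000 used in the code earlier in this post.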

Benefits of Cyclical Learning Rates for Computer Vision

Escape Local Minima with Regular Boosts: Deep networks in computer vision can encounter local minima and saddle points due to the complex nature of image data. By cycling the learning rate, models are periodically “nudged” out of potential traps, potentially landing in a better minimum.

Faster Training Time: Because the learning rate periodically increases, the model explores the loss surface more aggressively, leading to faster convergence. For large computer vision datasets, this can mean reduced training time and fewer resources required.

Mitigated Overfitting and Improved Generalization: In computer vision tasks, models are prone to overfitting, especially when trained for many epochs. By oscillating the LR, cyclical schedules keep the model from getting “comfortable” with one pattern of learning, which helps it generalize better to unseen data.

Enhanced Adaptability: Since CLR schedules allow a range of LRs, they reduce the need to tune a single, ideal LR. This flexibility is helpful when using varied datasets with complex image features, where the ideal LR may change over time.

Real-World Examples of CLR in Action

Image Classification: In complex image datasets like CIFAR-100 or ImageNet, CLR schedules can improve training stability and performance by enabling models to avoid stagnation in the loss surface. Studies have shown that CNNs, such as ResNet and DenseNet, trained with CLR schedules often reach higher accuracy faster.

Object Detection: In object detection, models often struggle with high variance due to complex scenes and multiple objects. Using CLR schedules helps these models adapt better to variability in the data, potentially increasing mAP (mean Average Precision) scores.

Segmentation: In medical image segmentation, where data is limited and specialized, CLR can help balance exploration and fine-tuning, leading to better boundary detection and feature recognition.

Best Practices When Using CLR in Computer Vision

Start with a Small LR Range: For sensitive models, a narrow range of learning rates, such as between 1e-5 and 1e-3, can prevent unstable oscillations.

Watch the Loss Curve: If the loss curve oscillates too dramatically, the LR range may be too wide. Consider reducing the upper boundary.

Combine with Regularization: CLR schedules work well alongside regularization techniques, such as dropout and batch normalization, helping models generalize more effectively.

Conclusion: Unlocking New Potential with Cyclical Learning Rates

Cyclical Learning Rates bring a fresh approach to model training by allowing learning rates to oscillate dynamically. For computer vision tasks that involve complex data and require deep learning architectures, this method can offer a robust balance between rapid learning and stable convergence. By adopting CLR schedules, data scientists and engineers can unlock improved performance, reduced training time, and greater adaptability in their computer vision models. For those looking to maximize the potential of computer vision networks, CLR schedules can be a powerful tool in the training toolkit.